Despite unprecedented investment in AI, only about 10% of organizations report significant ROI from advanced AI initiatives. Nearly half expect meaningful returns within three years, yet most run into hidden organizational barriers that stall impact. The problem is rarely the technology itself; it is usually people, structure, and the reality of how work gets done.

The Adoption Paradox

In boardrooms, the refrain remains familiar: “We’ve poured millions into AI. Why isn’t transformation happening?”

What leaders often miss: employees aren’t resisting AI; they’re resisting the uncertainty, loss of agency, and lack of trust that come with abrupt top-down change.

As Peter Senge observed in The Fifth Discipline, “People don’t resist change. They resist being changed.” Your workforce isn’t afraid of AI itself. They’re afraid of being changed on someone else’s timeline, with no voice in the decision.

In his latest book, Reshuffle: Who Wins When AI Restacks the Knowledge Economy, Sangeet Paul Choudary argues that AI’s true impact is best measured not by how intelligent it is compared to humans, but by its ability to reshape industries and organizational systems, fundamentally changing how work is coordinated and how value is created. Rather than treating AI as merely a productivity tool, businesses must recognize it as a disruptive force that reorganizes workflows, power structures, and competitive dynamics.

The organizations successfully scaling AI aren’t the ones with the best models or the biggest budgets. They’re the ones that have fundamentally rewired how people relate to the technology and how the system around it works. That rewiring requires understanding four interconnected barriers that block adoption far more effectively than any technical constraint.

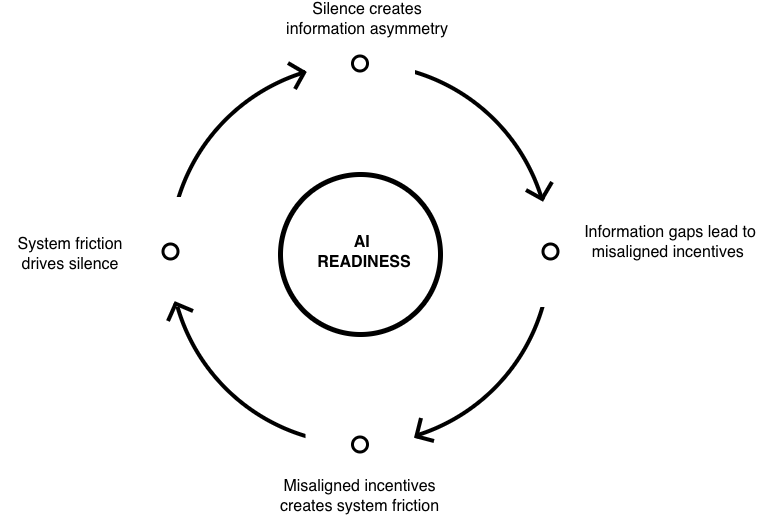

The AI Readiness Flywheel: Four Barriers

- Silence

- Information

- Incentive

- System

These barriers form a self-reinforcing cycle in which each one feeds the others. Understanding this flywheel is essential: you cannot solve one barrier without addressing the other three at the same time.

Silence: What Employees Won’t Tell You

Imagine you work at a company that has just mandated AI adoption. You cautiously start using the tools. Then you notice you’re more productive, and you ask yourself: “Should I tell anyone?” That moment of hesitation explains much of why your AI adoption is stalling.

The ‘AI Shame’ Epidemic

The statistics are stark:

- 53.4% of C-suite executives hide their AI usage

- 48.8% of general employees actively conceal when they use AI

- 62% of Gen Z workers hide their AI usage to avoid judgment

The numbers don’t capture the real cost: what goes unshared. When leaders use AI privately but never discuss it, they send a signal that using AI might make you look bad. Nearly half the workforce uses the tools while pretending otherwise, creating an information asymmetry that drives the most valuable learning underground.

Why Smart People Hide Intelligence

This isn’t only about job insecurity. Research points to a status anxiety deep enough to reshape how work actually gets done:

- 63% of workers fear that admitting AI use makes them seem unmotivated or unskilled

- Medical specialists ignore AI diagnostic recommendations to protect professional credibility

- Software engineers develop code with AI assistance but claim it’s their own work

- Financial analysts use AI insights but bury them in human-generated reports

In several organizations, backchannel Slack groups and undocumented workflow automations circulate quietly. They give teams an edge, but they also lock progress inside invisible lanes, out of sight of the leaders who most want to scale it. These are dignity problems, not technical ones.

The Cascading Silence

When your best people hide their AI usage:

- Knowledge never surfaces: The engineer who figured out an elegant way to use AI for code review keeps it to herself. Her peers never learn. The organization never standardizes the practice.

- Management flies blind: Leaders can’t measure adoption because adoption is happening invisibly. They commission surveys that show “51% of frontline workers use AI regularly” and have no idea whether that’s true or whether people are lying to protect themselves.

- Shame becomes structural: Each hidden behavior reinforces the next one. “If everyone else is hiding it, maybe I should too.” The silence becomes the organizational default.

The silence barrier runs deeper than most leaders think.

Information: Knowledge Gaps Create False Barriers

According to Slack’s Fall 2024 Workforce Index, 61% of desk workers have spent fewer than five hours learning about AI, and 30% report having received no AI training at all. That gap feeds the Information barrier directly. Less often explored is how it shapes the decisions employees make about whether and how to use AI.

The Polarization Trap

In the absence of practical knowledge, organizations split into two camps:

- The Dismissives: “This is just hype. AI can’t actually do anything useful. Give it five years.”

- The Evangelists: “AI is going to transform everything overnight. We need to be ready for anything.”

Both camps exist in the same company, and neither can hold a productive conversation with the other. Adoption suffers because middle management is caught between two almost religiously opposed factions.


In one digital services firm, frontline staff pick up AI skills through global user forums and side projects, while formal company learning remains stuck on generic “AI awareness” modules.

As a result, “AI adoption” means two different things: high-level e-courses completed for compliance, and real progress happening out of sight. Employees decide whose guidance is safe to follow not by job title or seniority, but by online peer reputation and visible results.

The Real Training Crisis

Most organizations treat AI training much like traditional IT onboarding – a one-off certification designed to fulfill a checklist requirement.

However, employee feedback tells a different story:

- 60% report that learning to use AI tools takes longer than performing tasks manually

- Fewer than 4% of entry-level employees receive meaningful AI training, compared with 17% of executives

- Most training provided is generic (such as “How to Use ChatGPT”) rather than tailored to specific roles (such as “Applying AI to Enhance Audit Processes”)

As a consequence, employees face both an overload of generic information and a lack of relevant, role-specific knowledge.

This reflects a common pitfall: organizations develop visions for AI adoption without a deep understanding of the systemic forces required to realize them. Unless AI strategies are aligned with the actual workflows and systems in which employees operate, predictions and plans remain disconnected from reality.

What Actually Works: From Policies to Questions

DBS Bank didn’t solve this with a longer training manual. It created the PURE framework, four simple questions that every employee could ask when considering a Data & AI use case:

- Is this use purposeful and meaningful?

- Will the results surprise (and potentially harm) customers?

- Does this respect customers and their data?

- Can we explain what this AI is doing?

Instead of 100-page governance documents, four questions. Instead of compliance experts making decisions, frontline employees making decisions with guardrails.

By 2023, this approach helped DBS generate $274 million in AI-driven value.

This suggests that knowledge barriers dissolve when abstract principles are translated into concrete questions people can use in their daily work. It also fits the view of AI, set out earlier, as an engine that reorganizes workflows rather than a task-automation tool: if workflows are being reorganized, they must stay intelligible and transparent to everyone who takes part in them.

Incentive: When Efficiency Threatens Identity

Here’s a question that almost nobody asks explicitly: If using AI makes my team smaller, more efficient, or less needed – why would I help accelerate that?

Yet your adoption strategy implicitly asks people to answer that question against their own interests, without ever acknowledging that this is what it is asking.

Fault-Finding Defense

At Beta Insurance, when AI systems were introduced to process claims, employees did not actively resist the technology. Instead, they set extraordinarily high standards for its performance.

- AI accuracy had to reach 99.8% to be considered trustworthy (while human adjusters averaged 96.2%).

- Processing times needed to improve by 50% (though conservative pilots showed only a 30% reduction).

- False positives had to be nearly eliminated despite the inherently noisy nature of insurance data.
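To see how lopsided the first bar was, compare the error rates it implies. The short calculation below uses only the figures above and assumes that “accuracy” means the share of claims handled correctly:

```latex
\[
\text{human error rate} = 100\% - 96.2\% = 3.8\%, \qquad
\text{required AI error rate} = 100\% - 99.8\% = 0.2\%
\]
\[
\frac{3.8\%}{0.2\%} = 19
\]
```

Under that reading, the AI was expected to make about one-nineteenth as many errors as the adjusters it would support, a standard the human adjusters themselves fell well short of.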

From the outside, these demands looked like a commitment to quality. Internally, they functioned as a defense mechanism. The project was delayed, costs escalated, and the organization could justify postponing wider AI deployment on the grounds that “the technology wasn’t ready yet.”

Making Promises Credible

At Acme e-Commerce, leadership implemented a transparent, verifiable commitment to increase total labor spending by 1% annually.

That 1% number matters because it’s:

- Easy to audit: Anyone can check the payroll ledger.

- Hard to manipulate: You can’t redefine “labor spending” to hide cuts.

- Credible: It’s a promise leadership can actually be held accountable for breaking.

Trust is critical for successful collaboration, and it erodes quickly when incentive structures don’t align with collective goals. By explicitly tying growth investment to efficiency gains, Acme turned AI from a cost-reduction threat into an opportunity amplifier. Efficiency didn’t mean layoffs; it meant expansion into new markets and roles.

Choudary emphasizes in Reshuffle that AI shifts power dynamics and redistributes value. When incentives don’t explicitly reinvest efficiency gains in workers and growth, people rationally conclude that they are being disempowered rather than enabled. The incentive barrier ultimately comes down to whether people believe the organization sees them as partners in value creation or as costs to be eliminated.

The Hierarchy Shock

AI creates organizational turbulence that traditional incentive structures can’t absorb. When a programmer with two years of experience writes better code than a ten-year veteran (because they use AI), what does your compensation structure do?

In one software firm, junior developers were outperforming seniors, but promotion and compensation still followed tenure. The message was clear: Master the new technology and stay in your box.

Another organization flipped the model. Job grades expanded. Promotion cycles shortened from every five years to biannual. Competency models explicitly weighted AI mastery. Suddenly, learning the new tools wasn’t a threat to career progression; it was the accelerant.

When Managers Become Gatekeepers (And Guardians of Scarcity)

Here’s an uncomfortable dynamic: managers often control whether their teams get access to AI tools, training, and time to experiment. And their authority, bonuses, and promotions often depend on managing large teams.

When AI threatens to reduce headcount, that same manager has a personal financial incentive to slow adoption.

At Gamma Translation Services, one department dragged its feet on automation not because the technology wasn’t ready, but because the department head’s prestige and compensation were tied to managing 25 people. Automation meant managing 12.

How do you solve this? Not with better messaging about how “AI isn’t about layoffs.” You solve it by redefining what success looks like. OPPO, the smartphone maker, held an internal AI competition in which every department had access to the same tools and results were ranked publicly. Suddenly, managers had no choice: champion AI or risk public humiliation when their team underperformed.

The competition reframed success from managing large teams to enabling teams to achieve more with less.

System: When Local Optimization Creates Global Failure

This is the hidden killer nobody talks about at AI conferences: your AI initiatives are working perfectly, yet your business is stalling.

The Bottleneck Migration

Titan Automotive, a major manufacturer, invested heavily in generative AI to accelerate software development. Design iterations sped up, code generation improved, and feature testing times shortened significantly. Individual software team productivity rose by 30 to 40 percent.

However, vehicle production timelines did not improve; in fact, they worsened.

The root cause was clear: Titan optimized its software development process without simultaneously upgrading the hardware manufacturing pipeline. Software teams were able to iterate three times faster but were constrained by hardware manufacturing processes that remained unchanged – a classic bottleneck. It was as if a racetrack had been built down only one side of the production line.

This case exemplifies the importance of systems thinking. Without understanding your organization as an interconnected system, local AI success becomes global friction.

AI should be viewed as an engine for restructuring entire systems, not as a tool for automating isolated tasks. When you deploy AI in one workflow without reorchestrating the entire coordination system, you don’t get net efficiency gains – you get bottleneck migration.
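The logic of bottleneck migration can be sketched with a toy two-stage pipeline. This is a minimal illustration that assumes a strictly serial flow from software to hardware. The function name `simulate` and all of the weekly rates are hypothetical and are not Titan’s actual figures.

```python
"""A toy model of bottleneck migration in a two-stage serial pipeline.

All rates are hypothetical and illustrative; they are not real company data.
"""


def simulate(software_rate: float, hardware_rate: float, weeks: int) -> tuple[float, float]:
    """Return (units completed, software work still waiting) after `weeks`.

    Each week the software stage hands its output to the hardware stage.
    Hardware can process at most `hardware_rate` units per week, so any
    excess accumulates in a queue instead of becoming finished output.
    """
    queue = 0.0      # software output waiting for the hardware stage
    completed = 0.0  # units that have cleared both stages
    for _ in range(weeks):
        queue += software_rate                  # upstream pushes its weekly output
        processed = min(queue, hardware_rate)   # downstream capacity caps throughput
        queue -= processed
        completed += processed
    return completed, queue


# Baseline: both stages run at the same pace.
before = simulate(software_rate=10, hardware_rate=10, weeks=12)
# Software becomes three times faster; hardware is unchanged.
after = simulate(software_rate=30, hardware_rate=10, weeks=12)
print(before)  # (120.0, 0.0)
print(after)   # (120.0, 240.0)
```

Tripling the software rate leaves completed output unchanged, while the backlog waiting for hardware grows every week. The model stops there. In a real organization, that backlog has to be tracked, prioritized and often reworked, which is one plausible way that local speedups can make end-to-end timelines worse rather than just no better.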

Siloed Excellence, Systemic Mediocrity

Organizations often optimize AI locally within teams, but fail to coordinate across departments, causing systemic friction.

- Marketing adopts generative AI for campaign creation, but sales enablement, customer success, and product marketing never integrate with those workflows, so campaigns don’t align with what’s being sold.

- Finance automates reconciliation with AI, but accounting, tax, and compliance are still on manual processes, so efficiency gains get absorbed by handoffs instead of reducing overall finance operations time.

- Customer service deploys AI chatbots, but they’re built to handle only 40% of inquiries, routing the rest to humans with zero context, creating more work instead of less.

In each case, individual teams optimize locally, but the organization as a whole experiences friction due to lack of coordination and integration. Before deploying AI broadly, it’s crucial to understand where information flows freely and where it stalls. Real value comes from enabling coordination across the organization, not just automating isolated tasks.

The Accuracy Trap: When Perfect Becomes Destructive

Here’s a paradox: AI’s strength is precision. But organizational harmony sometimes requires ambiguity.

FreshMart, a rapidly growing grocery e-commerce company, deployed AI to trace customer complaints to their root cause.

When a customer received spoiled fruit, AI could pinpoint exactly what went wrong: Did procurement buy poorly? Did storage mishandle the product? Did delivery cause the damage?

For the first time, accountability was precise. Perfectly attributable to one department.

Departments hated it.

What had once been shared responsibility became explicit blame. Department heads who’d operated under comfortable ambiguity now found themselves publicly exposed for specific failures. The binary nature of algorithmic judgment – assigning full responsibility to one side – ignored the gray areas of real operations.

Disputes escalated. Relationships fractured. And ironically, service quality got worse because departments were spending energy defending themselves rather than solving problems.

Closing the Loop: How System Friction Feeds Back into Silence

Here’s the critical connection that completes the flywheel: when employees experience this system-level precision and blame, they respond by hiding their struggles and concerns. A new pattern emerges: “Admitting AI involvement means getting blamed” → people hide their use of AI → silence deepens.

Once FreshMart’s precise accountability system was in place, departments learned the lesson. They began concealing which AI systems they used, which decisions were AI-informed, and which problems were caused by algorithmic decisions. System precision drove organizational silence.

Similarly, Titan Automotive’s software teams, frustrated by the hardware bottleneck, stay silent about the coordination problems. Why? Because “If we say hardware is the bottleneck, leadership might think we’re resisting change or not adapting fast enough.” System friction leads to defensive silence.

AI adoption barriers are not, at root, technical problems. They are human and organizational problems rooted in:

- What people fear to say (Silence)

- What people don’t know (Information)

- How people are rewarded (Incentive)

- How work actually flows (System)

These barriers don’t exist in isolation. They form a complete, self-reinforcing flywheel where each barrier strengthens the others. Organizations that ignore this interconnection don’t fail to adopt AI – they fail to scale it, wasting investment and momentum. Understanding these four barriers individually is insufficient. You must grasp how they amplify one another.